A Human Rights-driven AI Assessment of Ethical Industry 5.0 Transition and Sustainability in Circular Value Chains

– a paper on the impact of AI on Human Rights and mitigation of related risks.

AI-DAPT and the AI impact on human activities

AI-DAPT aims to bring a data-centric approach to AI, incorporating end-to-end automation and AI-based methods to support the design, execution, observability, and lifecycle management of robust, intelligent, and scalable data-AI pipelines. The project aims to implement a novel AIOps/intelligent pipeline lifecycle framework, contributing to sophisticated, Explainable AI-driven data operations and collaborative feature engineering. Its goal is to reinstate the importance of pure data-related work in AI and reinforce AI solutions’ generalizability, reliability, trustworthiness, and fairness. Since the project is so focused on AI, the impact that artificial intelligence has on human beings and their fundamental rights is a central theme to which the project partners pay the utmost attention.

At this point in the evolution of artificial intelligence, we are all aware of AI’s impact on human activities in various fields. We are talking about both positive and negative effects, particularly regarding human rights: think about how AI can improve access to information, health services, education, and legal aid, especially in underserved communities. AI-powered chatbots and virtual assistants have the potential to provide vast amounts of legal advice, medical consultations, and educational support. And it’s not just limited to that. AI can also advance health and safety by helping detect diseases early, predict outbreaks, and personalize treatments, ultimately improving public health outcomes. In terms of safety, AI can enhance disaster response through predictive analytics and real-time monitoring. Let’s not forget about economic development: AI has the power to drive economic growth by automating mundane tasks, optimizing supply chains, and creating new job categories. This can indirectly improve the standard of living and reduce poverty, contributing to realizing economic and social rights. And, of course, transparency and accountability are critical. AI systems can monitor government actions, identify corruption, and enhance transparency. Predictive policing and fraud detection systems can help in maintaining law and order. The potential impact of AI in so many different areas is truly remarkable!

Nevertheless, there are also negative impacts that must be considered and avoided. AI-driven surveillance and data collection can lead to significant privacy breaches: facial recognition, social media monitoring, and data mining can result in unauthorized access to personal information, violating the right to privacy. AI systems can perpetuate and amplify existing biases if trained on biased datasets, which can lead to discriminatory practices in hiring, law enforcement, credit scoring, and other areas, adversely affecting marginalized groups. Automation through AI can displace workers, leading to unemployment and underemployment; this economic displacement can undermine the right to work and the right to an adequate standard of living. Freedom of expression and information can also be affected: AI algorithms used by social media platforms and search engines can manipulate information, censor content, and create echo chambers, limiting access to diverse information. Finally, decisions made by AI systems can be opaque and difficult to challenge, especially when algorithms are proprietary. This lack of transparency can impede access to justice and the right to a fair trial.

Mitigations already exist to address AI’s adverse effects, such as promoting transparency in AI decision-making processes, making AI systems explainable and accountable, and ensuring diversity in data collection and algorithm training to minimize biases and discrimination. Most importantly, there are regulations and robust legal frameworks to ensure that AI technologies comply with human rights standards.

Regulations in place

As of today, the European Union has put in place several regulations, as well as legal and ethical instruments, to ensure that AI technologies comply with human rights standards, and others are underway.

The starting point was the Ethics Guidelines for Trustworthy AI, which, already in 2019, put forward a set of key requirements that AI systems should meet to be deemed trustworthy. They were followed in July 2020 by the Assessment List for Trustworthy Artificial Intelligence (ALTAI), presented in its final form by the High-Level Expert Group on Artificial Intelligence (AI HLEG).

In March 2024, the European Parliament approved the AI Act. The law results from an agreement with the member states after months of joint work and is the first comprehensive regulation of artificial intelligence. The Act classifies AI systems into different risk levels, with stringent requirements for high-risk applications. It mandates transparency, data governance, and risk management measures to minimize bias and ensure accountability. For example, high-risk AI systems must use representative data sets to reduce biases and undergo thorough documentation and evaluation. The AI Act prohibits certain practices, such as the use of real-time remote biometric identification in publicly accessible spaces for law enforcement (subject to narrow exceptions), cognitive behavioral manipulation, and social scoring. It also imposes strict rules on the transparency and accountability of general-purpose AI models, particularly those with systemic risks.

Additionally, besides other instruments relevant to AI, the EU is defining the AI Liability Directive, a proposed directive that aims to ensure that individuals harmed by AI technologies can seek compensation.

This framework is intended to provide legal clarity on the responsibilities of AI developers and users.

In line with European directives, researchers in the field are studying the issues and seeking practical solutions to minimize negative impacts and ensure the effectiveness of AI.

Adding value to the regulations: “A Human Rights-driven AI Assessment of Ethical Industry 5.0 Transition and Sustainability in Circular Value Chains.”

In this blog post, we would like to present to the general public the methodology developed in a paper by some partners of the AI-DAPT project. The paper is titled “A Human Rights-driven AI Assessment of Ethical Industry 5.0 Transition and Sustainability in Circular Value Chains”. It was written by Marina Da Bormida in Cugurra (S&D), with Sotiris Koussouris (Suite5) and other researchers, within the context of another project funded under H2020: Circular TwAIn.

This paper emphasizes the importance of protecting human rights in developing and using AI tools, especially those that promote sustainability in manufacturing. It suggests employing a thorough Human Rights Impact Assessment (HRIA) that goes beyond traditional methods to proactively address risks related to AI regulations and ethical principles. The proposed HRIA was tested in three pilot cases within the Circular TwAIn project, which demonstrated its effectiveness in yielding valuable insights.

The paper has a substantial impact on AI-DAPT for two reasons:

• First, AI-DAPT will be validated through application to real-life problems in industries such as Health, Robotics, Energy, and Manufacturing, as well as through integration into different AI solutions currently available on the market. These real environments are very close to the content and use cases of the Circular TwAIn project.

• Second, the paper is a strong example of ethical AI development and of how researchers can effectively incorporate ethical guidelines and human rights principles into AI system design and deployment during project implementation.

The proposed methodology connects human rights principles with the essential requirements for trustworthy AI systems, as outlined in the Ethics Guidelines for Trustworthy AI and the AI Act. This approach aims to assist AI developers in assessing and enhancing the alignment of their systems with these requirements in a rights-respecting manner. Doing so helps prevent potential harm and maximizes the technology’s benefits.

The model is designed to proactively prevent human rights violations and ethical risks while promoting human empowerment and well-being. This proactive orientation is what sets it apart from other frameworks.

A multidisciplinary team, including AI developers, data scientists, HR professionals, and ethics experts, has comprehensively analyzed and assessed diverse AI-driven solutions. This approach considers the interconnected ethical, social, and technical factors while identifying necessary measures to mitigate existing and future harms.

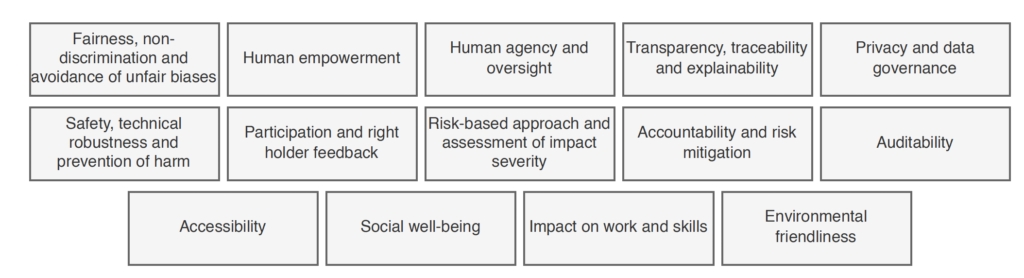

The model includes 14 key elements related to fairness, non-discrimination, and avoiding unfair biases, all of which are vital in AI systems. These elements involve treating individuals and groups equally, addressing historical biases, and ensuring inclusive and gender-responsive engagement processes. It’s crucial to consider these features when implementing new AI applications. The selected 14 criteria cover several dimensions, as it is shown in Figure 1:

Figure 1: Dimensions and topics covered by the proposed HRIA

The 14 elements are related to:

- Fairness, non-discrimination, and avoidance of unfair biases are crucial in AI systems. This involves treating individuals and groups equally, addressing historical biases, and ensuring inclusive and gender-responsive engagement processes.

- Human empowerment encompasses enhancing people’s degree of autonomy and self-determination so that they become stronger and more confident.

- Human agency and oversight mean that the AI system should support human autonomy and human decision-making. The impact of such systems on human affection, trust, and (in)dependence should also be assessed, together with their effects on human perception and expectations.

- Transparency, traceability, and explainability concern how open the system is to scrutiny. This includes its comprehensibility to external observers (interpretability) and its understandability to non-experts, especially those directly or indirectly affected (explainability). Traceability involves the ability to track the journey of data input and related processes throughout all stages of sampling, labeling, processing, and decision-making during the development of the AI system.

- Privacy and data governance are essential topics when considering the possible impact of the AI system on privacy and data protection.

- Safety, technical robustness, and prevention of harm are a must. The AI system behavior and data accuracy must be verified and monitored to guarantee human beings’ safety and prevent adversarial attacks.

- Participation and rights-holder feedback concern meaningful engagement with, and feedback from, affected or potentially affected rights-holders, duty-bearers, and other relevant stakeholders/parties.

- The AI Act relies on a risk-based approach to assess the severity of impacts. When considering and addressing these impacts, it’s crucial to take into account the severity of their consequences on human rights, including their scope, scale, and irreversibility.

- Accountability and risk mitigation are concepts linked with the responsibility for one’s own actions. Mechanisms must be implemented to ensure responsibility for developing, deploying, and using AI systems. This includes the ability to report on actions or decisions contributing to the AI system’s outcome and respond to the consequences of such an outcome. Accountability is closely related to risk management. In the case of unjust or adverse impacts, adequate possibilities for redress should be ensured.

- Auditability refers to an AI system’s capability to undergo an assessment of its algorithms, data, and design processes. This can be achieved by ensuring traceability and logging mechanisms from the early design phase. When it is necessary to prioritize actions to address risks and impacts, the severity of potential human rights consequences should be the core criterion (mitigation hierarchy).

- Accessibility in AI refers to creating systems that can be used by people with diverse needs, characteristics, and capabilities, following Universal Design principles. AI must be user-centric, allowing people of all ages, genders, abilities, and characteristics, including those with disabilities, to use AI products and services.

- Social well-being includes all kinds of considerations about the possible positive and negative impacts expected from the AI system’s deployment for individuals (operators, customers, technology providers, etc.) and society and how to respond to any possible threat.

- AI systems may change the work environment and impact workers, their relationship with employers, and their skills. It’s important for AI systems to support humans in the workplace and to contribute to creating meaningful work.

- Finally, the AI system’s potential negative or positive impact on the environment should be considered. AI systems must operate in the most environmentally friendly way possible. The development, deployment, and use of the AI system and its entire supply chain should be assessed. For example, it is essential to consider resource usage and energy consumption during training and choose options with a lesser net negative impact.

The mentioned criteria are complementary, and each can vary in relevance across different application contexts and use cases.
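As a rough illustration of how the severity-based prioritization mentioned in the list above (scope, scale, and irreversibility) might be operationalized, here is a minimal Python sketch. It is not the scoring method used in the paper: the `Impact` class, the 1 to 5 scales, the unweighted sum, and the example impacts are all assumptions made purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Impact:
    """One identified human rights impact (all fields are hypothetical)."""
    description: str
    criterion: str        # which HRIA criterion the impact relates to
    scope: int            # how many people are affected: 1 (few) to 5 (many)
    scale: int            # how grave the harm is: 1 (minor) to 5 (grave)
    irreversibility: int  # how hard it is to remedy: 1 (easy) to 5 (impossible)

    def severity(self) -> int:
        # Assumption: an unweighted sum of the three dimensions. A real
        # assessment may weight or combine them differently.
        return self.scope + self.scale + self.irreversibility


def prioritize(impacts):
    """Return the impacts ordered from most to least severe."""
    return sorted(impacts, key=lambda impact: impact.severity(), reverse=True)


# Invented example impacts for a manufacturing use case.
impacts = [
    Impact("Biased defect attribution to operators", "Fairness", 3, 4, 3),
    Impact("Excessive energy use during training", "Environment", 2, 2, 2),
    Impact("Opaque quality-control decisions", "Transparency", 4, 3, 2),
]

for impact in prioritize(impacts):
    print(impact.severity(), impact.criterion, "-", impact.description)
```

In practice, the scores would come out of the multidisciplinary assessment itself, and the ranking would only be a starting point for discussing which mitigation measures to address first.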

The proposed Human Rights Impact Assessment (HRIA) model takes a comprehensive approach to addressing AI’s potential threats to human rights. By recognizing the multifaceted, intersectional, and dynamic nature of the harm caused by AI, this model can effectively address and mitigate the multiple human rights concerns associated with AI. This approach aims to shift the focus towards using AI as a tool to enhance human rights rather than cause harm, particularly in the manufacturing sector but also in other areas. We believe it represents a further improvement compared to the legal frameworks that are currently in place.

Conclusions

The dual nature of AI’s impact on human rights underscores the need for a balanced approach that maximizes benefits while mitigating risks through thoughtful policy, ethical considerations, and technological design.

In the coming years, developments in human rights regulation and AI applications are expected to be shaped by several critical trends and factors. This evolution will likely be influenced by the leadership of organizations such as the United Nations and the European Union, which are at the forefront of setting international standards for AI and human rights.

Additionally, there will be an emphasis on global cooperation and partnership in European research projects to establish harmonized regulations, ensuring that AI technologies adhere to universal human rights standards across different jurisdictions. AI-DAPT will be at the forefront, contributing to future regulations.